(The following is generated from a voice transcription, and my prototype prompt design library)

The last week I went down a giant rabbit hole on AI. A few weeks ago, I was out walking with a good friend. We usually walk and talk business for a few hours. This time we asked: what if we could automate the entire business? Not just bits, but as much as possible. I’m nowhere near that yet, but the idea stuck. It made me look at all the moving parts of my business and wonder how much more we could hand over to AI and agents to handle on their own.

Coding Isn’t Enough

I already use Cursor for coding. It has access to the repo and can use web search, but it doesn’t really understand the business. So this week I tried to untangle that. The recurring issue for us is simple: we’re great at getting features built, but not always great at marketing them. And with AI, you can’t rely on intuition or shorthand—you have to be explicit. I tend to keep things in my head and write short task descriptions. That doesn’t work with an LLM. You have to feed it all the context or it just guesses.

I’m still figuring out the balance between too much and too little detail, but it’s clear the AI needs far more structure than I’m used to giving. The less ambiguity it gets, the more deterministic and reliable its output becomes.

First Step: Formalizing the Workflow

I started by formalizing the early steps in our process. Cursor commands and rules helped. The first part was pre-planning: fleshing out an idea, not jumping into implementation. I tried to define what we want to do, what we won’t do, and how it fits with Shopify’s APIs and what our users expect. That meant a mix of research, writing, and market context.

Then came planning—turning the idea into something scoped and actionable. Cursor can handle that if I feed it the output from the pre-planning stage. From there it naturally flows into implementation, and eventually a hand-off to marketing.

Right now that hand-off is manual. When I finish a feature, I write a changelog, generate visuals from Figma templates, create a blog post and social content, and update help docs. We follow a Basecamp checklist, but it’s all still manual. I started thinking—could an agent do parts of this? Maybe it could monitor Shopify changelogs and suggest new ideas automatically. But that would require real product awareness, not just data access, which I’m not sure any of these tools actually have yet.

The Context Problem

That question led me down the context rabbit hole. ChatGPT doesn’t know my business deeply enough. So I started looking into RAG—retrieval augmented generation. The idea sounded promising: index company knowledge and let the AI query it through MCP tools. In theory, I could connect everything—Intercom (help center), Google Drive, Shopify blog, our websites, GitHub, and more.

In practice, it’s messy. Finding the information isn’t the problem—it’s keeping it up to date. Vector databases are easy enough to set up, but reliable connectors that sync continuously are still rare. I’d rather use something off the shelf than build and maintain it, but most options are early and incomplete.

I found Ragie, which looked like the best option at the moment because of its connectors, but even that has gaps. Web scrapers can fill in public data, but not private or code-related context. After some trial and error, I started thinking that real-time crawling and search might be the better approach for now, at least until the RAG ecosystem matures. It feels less elegant, but it’s practical.

It also made me realize that to make this work, I’d need to break tasks into smaller, well-defined steps so that each one can gather only the “need to know” context it actually requires. The more tightly scoped the context, the more predictable and relevant the output.

Improving Structure with Cursor Rules

While I was doing that, I started using Cursor rules more deliberately. They’re meant for architecture and coding standards, but I realized they could also describe company preferences—our tone, product values, and general way of working.

So I began splitting them up: marketing-related rules, coding rules, research standards, documentation structure, and design guidelines. It’s still rough, but it helped. I could start to see how AI could follow the same internal standards we use across everything—writing, research, design, and code.

This kind of structure is what starts to make LLM behavior deterministic. The clearer the instructions, constraints and “need to know” context, the more consistently it performs. The moment you remove ambiguity, it stops “guessing” and starts “executing.”

Orchestration and the Multi-Agent Question

That led me to the next rabbit hole: how to get multiple agents to work together without chaos. I tried to understand OpenAI’s Agents SDK, which looks like a promising framework. It gives you a way to wire agents and MCP tools together, but it’s very early. You can’t trigger it outside a chat, which makes it hard to automate properly. I kept thinking: it would be so much more useful if it could run from webhooks, task labels, or scheduled jobs.

I looked at CrewAI, which tried a “manager and employees” model for multi-agent work. Interesting concept, but a bit unpredictable in practice. n8n looks more solid—it’s got proper workflow triggers and integrations—but it feels heavy-handed for something this experimental.

Honestly, it’s all still changing too fast. APIs, standards, and even OpenAI’s core responses format keep evolving. So I’m trying not to lock myself in anywhere. My takeaway so far is that my AI toolkit should be general, portable, and platform-agnostic. And I need to own my data, making it accessible wherever I decide to connect it later.

The Doer + Validator Model

At some point I realized I should stop chasing tools and just map out the actual process. What are the distinct stages between idea and market? What goes in, what comes out, and how do I know each part is “good enough”?

I came up with about twelve steps and started experimenting with a Doer + Validator setup. One agent (the Doer) produces the artifact, another (the Validator) checks it. If it fails, the Validator re-prompts with context and feedback, then reruns.

The pattern feels promising because it creates feedback loops that make LLMs behave more deterministically over time. Each step has clear constraints, validation rules, and “need to know” context that the next step can trust. Less drift, more control.

Tooling Reality Check

We’ve used Basecamp for years, and while it’s perfect for small-team communication, it’s terrible for integration. The API is awkward and the webhooks lack detail. I admire 37signals’ simplicity, but it doesn’t fit this automation direction.

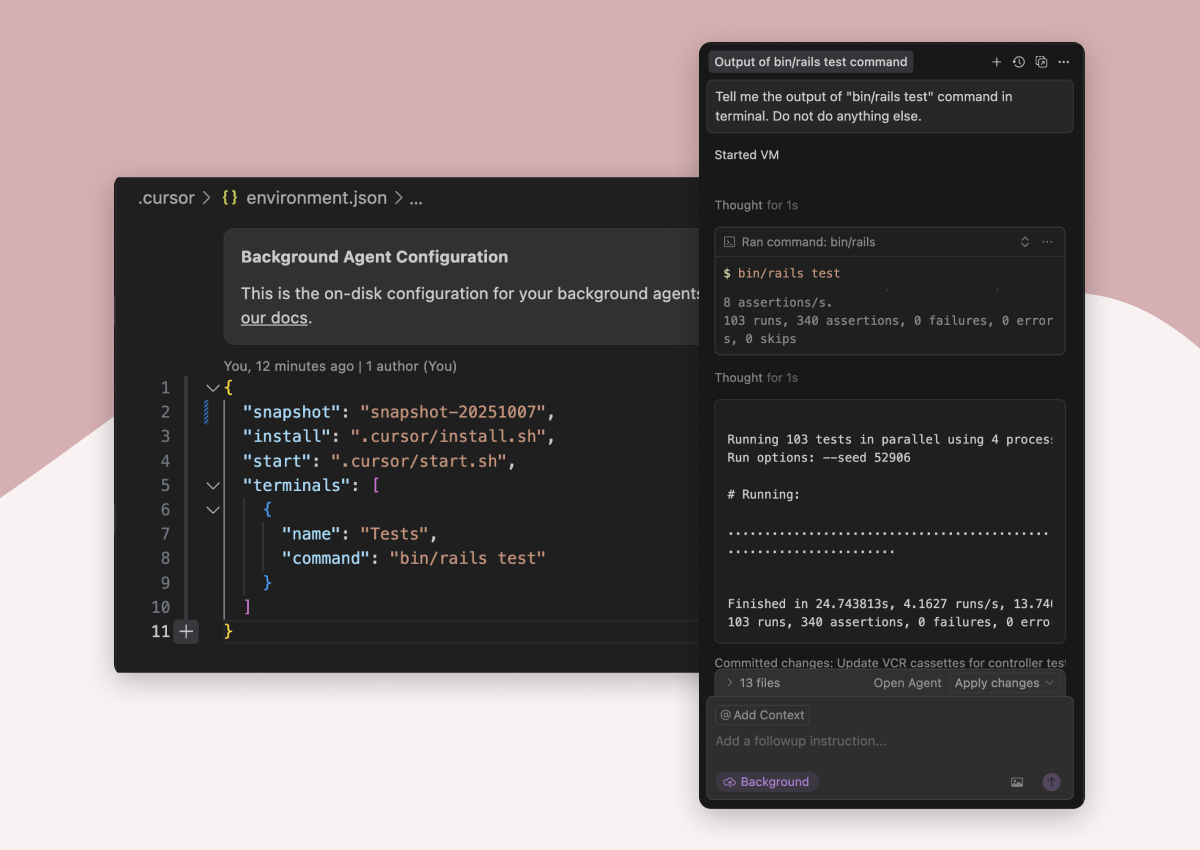

So I started trying Linear, which now integrates with Cursor’s background agents. It’s early but interesting. Background agents can take a Linear task and attempt to complete it automatically. The downside is that they run in a basic cloud VM with none of our dependencies. I tried setting one up—it worked sometimes, but mostly not.

That said, I think this will get better. I’m just not sure when. For now, I’m still using Cursor on my local setup for everything because it’s more predictable.

Building the Prompt Design System

At this point, I realized I was basically building a prompt design system without meaning to. So I made it official. I started using 11ty to manage it—a Markdown-based static site generator that fit perfectly for what I needed.

What I’ve ended up with is an AI Prompting System that keeps everything modular and composable. It generates structured prompts that tell each agent what to do, what to know, what good looks like, what tools to use, and what to output.

But here’s the key realization: this isn’t just about telling the AI what to do—it’s about communicating to it how we prefer things done, and what it needs to know to do it well. Our voice and brand run through everything: research, writing, coding, design, documentation, and communication. The system’s purpose isn’t only to automate work—it’s to express our reasoning, context, and preferences in a way an AI can consistently interpret and act on. The clearer and more structured the communication, the more predictable the results.

System Design Overview

Everything lives as Markdown and YAML inside an /prompts/ folder structured around reusable data and task definitions.

Core Goal

The system’s goal is to maintain a composable, Markdown-driven prompt generator that defines every phase of a semi-automated AI workflow. Each generated prompt communicates to an agent exactly what to do, what to know, what good looks like, what tools to use, and what to output—while ensuring the brand’s tone, logic, and preferences carry through every domain, whether it’s code or content.

How It’s Built

- It uses 11ty to compile Markdown, not HTML.

- Shared entities—teams, products, task types, and artifacts—live in

_data/*.yamlfiles and define reusable company-wide context. - Each task references those entities by key, so every prompt inherits the correct voice, constraints, and product expectations.

- Liquid templates merge everything into a final prompt—section by section.

- Shared data changes cascade through all dependent tasks automatically.

- The generator outputs pure Markdown prompts that can be pasted into tools like Cursor, ChatGPT, or Claude.

Folder Structure

/prompts/

_data/

teams.yaml

products.yaml

task_types.yaml

artifacts.yaml

tasks/

market_intel.md

prepare_pitch.md

shape_pitch.md

dev_planning.md

dev_implementation.md

dev_docs.md

marketing_planning.md

marketing_assets.md

marketing_content.md

marketing_post_launch.md

_layouts/

base.liquid.md

task.liquid.md

Each file in /tasks/ defines one step in the idea-to-market workflow. It specifies the team, product, task type, and artifacts it produces. This way, each prompt is context-aware—knowing not only what to do, but also how to sound, what standards to follow, and what to hand off next.

Data Model

The _data/ directory acts as a company brain:

-

teams.yamldefines organizational context, sources and constraints. -

products.yamldefines product-specific knowledge sources, and rules. -

task_types.yamldefines patterns for how we approach different kinds of work—research, implementation, testing, content creation, etc. -

artifacts.yamldefines specific instructions, input/output specs, constraints, and examples that reflect what good looks like to us.

Layout Logic

11ty’s eleventyComputed turns simple keys into full data objects. Liquid templates then render the prompts dynamically:

---

layout: base.liquid.md

---

# {{ name }}

## GOAL

{{ output_artifact.description }}

## BACKGROUND INFORMATION

**{{ product.name }}**

{{ product.description }}

{%- for constraint in product.constraints %}

- {{ constraint }}

{%- endfor %}

**{{ task_type.name }}**

{{ task_type.description }}

{%- for constraint in task_type.constraints %}

- {{ constraint }}

{%- endfor %}

## STEPS

1. **Understand the task**

{%- for artifact in input_artifacts %}

{%- for constraint in artifact.input_constraints %}

- {{ constraint }}

{%- endfor %}

{%- endfor %}

{%- for instruction in output_artifact.instructions %}

1. {{ instruction.title }}

{%- for step in instruction.steps %}

- {{ step }}

{%- endfor %}

{%- endfor %}

{%- if output_artifact.example %}

## EXAMPLE

```

{{ output_artifact.example }}

```

{%- endif %}

## OUTPUT CONSTRAINTS

{%- if output_artifact.output_constraints %}

{%- for constraint in output_artifact.output_constraints %}

- [ ] {{ constraint }}

{%- endfor %}

{%- endif %}

The result is a self-documenting, structured prompt library that standardizes both process and preference. Each agent receives clear communication—what we expect, how we prefer things done, and where its work fits in the larger flow. That structure makes its behavior more deterministic and its output more aligned with our expectations.

Epiphany: Communicating Brand and Context

The biggest insight so far is that this isn’t really about automation—it’s about communication. I’m trying to communicate to the AI not just context, but more specifically our preference for how to do things. That includes our vision, tone, how we reason through trade-offs and which details matter or don’t.

Structured prompts are the best way I’ve found to do that. They define what’s relevant, what’s off-limits, and how the final result should look. Every constraint, rule, and example narrows interpretation and improves alignment with our specific needs.

Why Structure Still Feels Like the Way Forward

Is building a prompting library even the right approach when agents are becoming more autonomous? I’m honestly not sure. But right now, it feels like the safest bet.

The plumbing will keep changing, but the constants seem to be:

- What to do

- What good looks like to us

- What tools to we use

- What context the AI needs to know for this specific task

- And—most importantly—how to communicate to the AI how we prefer things done

The clearer those elements are, the less random the output becomes. Structure is the language that turns open-ended models into reliable systems.

The Practical Takeaway

I’m learning that documenting every process in detail is crucial—but communicating to the AI how we prefer things done, and what it needs to know to do it, is what makes its behavior consistent. It’s about encoding both reasoning and context—so it understands not just what success looks like, but our version of success. Once that’s clear, the AI stops approximating and starts performing in line with how we work.

It’s tedious work—writing down every step, rule, and relevant piece of context—but it’s what turns an LLM from a creative assistant into a deterministic extension of our workflow.

Moving Forward

I’ll keep refining this prompting system—both the technical foundation and the communication layer that defines our brand preferences and “need to know” context. I’m sure I’ll rebuild parts of it again once orchestration tools mature. For now, breaking the process into smaller validated steps feels like a stable foundation.

If others are experimenting with similar multi-agent setups, I’d love to compare notes. I’m still figuring it out, and a lot of this is trial and error. But the direction feels right—AI that not only does the work, but understands what it needs to know and how we prefer it done.

Overall Conclusions

- Avoid vendor lock-in. Everything’s changing too fast to commit to one stack.

- Keep the toolkit general and portable. It should work in all AI tools, not just the current hotness.

- Own the data. Make it easy for humans and agents to access in structured ways.

- Communicate clearly to the AI how we prefer things done, and what it needs to know. Across research, writing, design, and code.

- Structured prompts are key. They make that communication unambiguous and repeatable.

- Use a modular system to keep everything clear, reusable, and maintainable.

I don’t know if this is the perfect setup, but it’s a start—and it’s already changing how I think about work. The goal isn’t just AI that performs tasks; it’s AI that understands what it needs to know, how we want it done, and in what format.. then executes accordingly. Everything else can evolve around that.